Big Data Learning

04-Hadoop-HDFS-JavaApi-Maven

Hadoop

HDFS

2022-10-13 13:21:43


bigdata


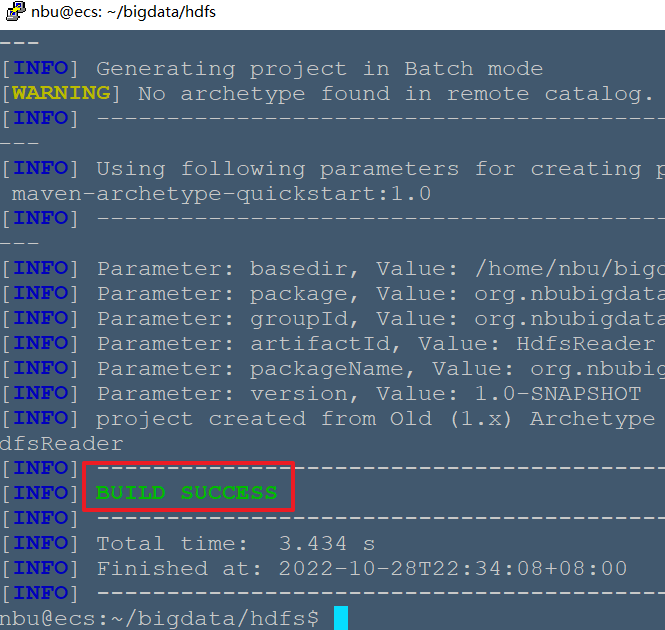

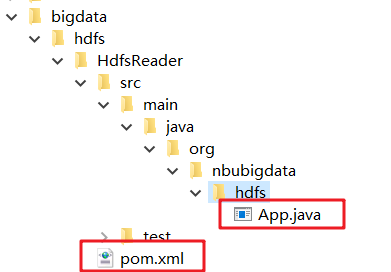

# HDFS File Read and Write (Java API, Maven)

## Reading an HDFS file

### 1. Start HDFS and store the file text.txt in it

text.txt contains "hello nbu". Create it locally, then copy it into HDFS:

```bash
nbu@ecs:~$ echo "hello nbu" > ~/text.txt
nbu@ecs:~$ /usr/local/hadoop/bin/hdfs dfs -put ~/text.txt /user/nbu/text.txt
```

### 2. Create the HDFS project under ~

```bash
nbu@ecs:~$ mkdir -p bigdata/hdfs
nbu@ecs:~$ cd bigdata/hdfs
nbu@ecs:~/bigdata/hdfs$ mvn archetype:generate "-DgroupId=org.nbubigdata.hdfs" "-DartifactId=HdfsReader" "-DarchetypeArtifactId=maven-archetype-quickstart" "-DinteractiveMode=false"
```

BUILD SUCCESS in the output means the project was generated.

The project is named HdfsReader and is created as the directory HdfsReader under ~/bigdata/hdfs.

Java source file: ~/bigdata/hdfs/HdfsReader/src/main/java/org/nbubigdata/hdfs/App.java

pom file: ~/bigdata/hdfs/HdfsReader/pom.xml

### 3. Write the Java program

Change into ~/bigdata/hdfs/HdfsReader and edit pom.xml as follows:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>org.nbubigdata.hdfs</groupId>
  <artifactId>HdfsReader</artifactId>
  <packaging>jar</packaging>
  <version>1.0-SNAPSHOT</version>
  <name>HdfsReader</name>
  <url>http://maven.apache.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <maven.compiler.source>1.7</maven.compiler.source>
    <maven.compiler.target>1.7</maven.compiler.target>
    <hadoopVersion>2.7.1</hadoopVersion>
  </properties>

  <dependencies>
    <!-- junit is declared once; the original listed it twice (3.8.1 and 4.11) -->
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.11</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>${hadoopVersion}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoopVersion}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>${hadoopVersion}</version>
    </dependency>
  </dependencies>

  <build>
    <plugins>
      <plugin>
        <artifactId>maven-assembly-plugin</artifactId>
        <configuration>
          <archive>
            <manifest>
              <mainClass>org.nbubigdata.hdfs.App</mainClass><!-- entry-point class -->
            </manifest>
            <manifestEntries>
              <Class-Path>.</Class-Path>
            </manifestEntries>
          </archive>
          <descriptorRefs>
            <descriptorRef>jar-with-dependencies</descriptorRef>
          </descriptorRefs>
        </configuration>
        <executions>
          <execution>
            <id>make-assembly</id>
            <phase>package</phase>
            <goals>
              <goal>single</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>
```

[pom.xml](https://hexo-img.obs.cn-east-3.myhuaweicloud.com/img/202210281954437.xml)

Then write the Java program in src/main/java/org/nbubigdata/hdfs/App.java, for example:

```java
package org.nbubigdata.hdfs;

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

/**
 * Reads the first line of /user/nbu/text.txt from HDFS and prints it.
 */
public class App {
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://127.0.0.1:9000");
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

        Path file = new Path("/user/nbu/text.txt");
        // try-with-resources closes the reader, the stream, and the HDFS handle
        try (FileSystem fs = FileSystem.get(conf);
             FSDataInputStream in = fs.open(file);
             BufferedReader reader = new BufferedReader(
                     new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String content = reader.readLine(); // read one line of the file
            System.out.println(content);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

[App.java](https://hexo-img.obs.cn-east-3.myhuaweicloud.com/img/202210281951283.java)

### 4. Build and package

```bash
# download the dependencies and build
nbu@ecs:~/bigdata/hdfs/HdfsReader$ mvn install
# package the jar
nbu@ecs:~/bigdata/hdfs/HdfsReader$ mvn assembly:assembly
# run the jar
nbu@ecs:~/bigdata/hdfs/HdfsReader$ cd target/
nbu@ecs:~/bigdata/hdfs/HdfsReader/target$ java -jar HdfsReader-1.0-SNAPSHOT-jar-with-dependencies.jar
```

> When `mvn install` and `mvn assembly:assembly` succeed, BUILD SUCCESS appears in the output. Because pom.xml binds the assembly plugin's `single` goal to the `package` phase, `mvn install` alone should already produce the jar-with-dependencies; the separate `assembly:assembly` goal may not be available in newer versions of the plugin.

A successful run prints `hello nbu`.

## Writing an HDFS file

The procedure is the same as in "Reading an HDFS file". Reference Java code:

```java
package org.nbubigdata.hdfs;

import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

/**
 * Writes "Hello world" to /usr/nbu/write_text.txt in HDFS.
 */
public class App {
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://127.0.0.1:9000");
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

        byte[] buff = "Hello world".getBytes(StandardCharsets.UTF_8); // content to write
        String filename = "/usr/nbu/write_text.txt"; // file to write
        // try-with-resources closes the output stream and the HDFS handle
        try (FileSystem fs = FileSystem.get(conf);
             FSDataOutputStream os = fs.create(new Path(filename))) {
            os.write(buff, 0, buff.length);
            System.out.println("Create:" + filename);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

[App.java](https://hexo-img.obs.cn-east-3.myhuaweicloud.com/img/202210282248194.java)

After a successful run:

```bash
# list the file in HDFS
nbu@ecs:~/bigdata/hdfs/HdfsCreater$ /usr/local/hadoop/bin/hdfs dfs -ls /usr/nbu
Found 1 items
-rw-r--r-- 3 nbu supergroup 11 2022-04-13 14:32 /usr/nbu/write_text.txt
```
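
The reader program calls `readLine()` once, so it prints only the first line of the file. For files with more than one line, a loop over the stream is a natural extension. The following is a minimal sketch, not part of the original tutorial: the class `StreamLines` and the method `readAllLines` are hypothetical names, and a `ByteArrayInputStream` stands in for the stream returned by `fs.open(...)` so that the example runs without a Hadoop cluster. Since `FSDataInputStream` is a `java.io.InputStream`, the same method should accept it unchanged.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not from the original post.
class StreamLines {

    /** Reads every line from the stream as UTF-8 text, then closes the stream. */
    static List<String> readAllLines(InputStream in) throws IOException {
        List<String> lines = new ArrayList<String>();
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            // readLine() returns null once the end of the stream is reached
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for fs.open(new Path("/user/nbu/text.txt")); the second
        // line is made-up sample data to show the loop.
        InputStream in = new ByteArrayInputStream(
                "hello nbu\nsecond line\n".getBytes(StandardCharsets.UTF_8));
        for (String line : readAllLines(in)) {
            System.out.println(line);
        }
    }
}
```

In the HDFS reader, the call would presumably look like `readAllLines(fs.open(file))`, placed before the `FileSystem` is closed. The sketch avoids language features newer than Java 7 so that it stays compatible with the `1.7` compiler level set in pom.xml.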
